In a Digital World, Data = Identity

Identity can be broken down into two distinct aspects. The first is “the fact of being who or what a person or thing is⁵.” My earlier post, The Impact of Digital Identity, focused on the ways in which blockchain technology can be used to create a decentralized personal identity tied to a unique and verifiable “digital fingerprint.” The post that follows will focus mainly on the second aspect of identity, which can be defined as “the characteristics determining who or what a person or thing is⁵.”

We leave digital footprints everywhere we go. Advancements in voice technology and the corresponding proliferation of in-home devices mean data collection is no longer restricted to our online lives. All of our steps can be pieced together to create an accurate picture of what we do, what we like, who we talk to, what we spend money on, and as a result, who we are.

I won’t go into the prevalence of large-scale data breaches or the role user data plays in the business models of many “free” and widely used Internet services. Suffice it to say that we have lost control of our data, and as a result, we have little control over this component of our digital identity.

Why it Matters: Unintended Access and Unintended Inferences

Supreme Court Justice Louis Brandeis perhaps most aptly described the historical view of privacy as “the right to be let alone.” Now privacy would be better described as “the ability to control data we cannot stop generating.¹” More importantly, as we generate more and more data each day, this data now gives rise to “inferences that we can’t predict.¹” Quite simply, we do not understand the extent to which our data is being collected, with whom it is being shared, or the ways in which it is being used to derive insights about our identity. Sometimes our data is used in non-obvious ways. For example, machine learning can be applied to Google searches to draw inferences about health, and language patterns in anonymously written text or code can be used to infer authorship, among countless other examples.

Even if we have come to accept that our data is for sale, the purpose for which it is being “purchased” is not often clear.

Traditionally, security and privacy have been two distinct fields where security involved the safeguarding of data and privacy involved the protection of user identity. As data is now a primary component of digital identity, the two have blended and more emphasis is being placed on privacy, which has historically taken a back seat to more pressing security concerns. In other words, in a world where prolific machine learning applications raise concerns about unintended inferences, protection against unintended access to data becomes a heightened priority¹.

Governments have taken notice and have begun to implement data privacy regulation (Europe’s GDPR, for example). However, data privacy regulations will likely be ineffective: we cannot meaningfully consent to data collection when unintended usage and unintended inferences impair our ability to value access to our data¹.

This doesn’t mean that users or organizations shouldn’t share their data. Keeping data private doesn’t mean data has to be kept in silos; the real issue is unintended access.

Blockchain technology can help by facilitating models that allow for minimal disclosure of sensitive information and mechanisms through which data owners can be commensurately compensated for allowing access. Privacy-focused blockchains can also allow for a more secure way to exchange information.

Minimal Disclosure Models

Users repeatedly disclose non-relevant but sensitive information when transacting online. For example, if a rental car company needs to verify that Alice is old enough to rent a car, it might ask Alice for a copy of her driver’s license, which contains her address, her driver’s license number, and other demographic information in addition to her date of birth, all of which she might not want to share. The rental car company really only needs to know that she is old enough to rent a car; it doesn’t need to know that she is 5’6” and lives on Center St. It doesn’t even need to know her exact birthdate, only that she is above a certain age. Repeatedly sharing unnecessary information with multiple parties creates more points of vulnerability. Minimal disclosure models leverage blockchain technology to create systems in which only relevant data is disclosed to querying parties, while non-relevant data is kept private, greatly reducing both the transmission of sensitive identifying information and the number of parties storing it.

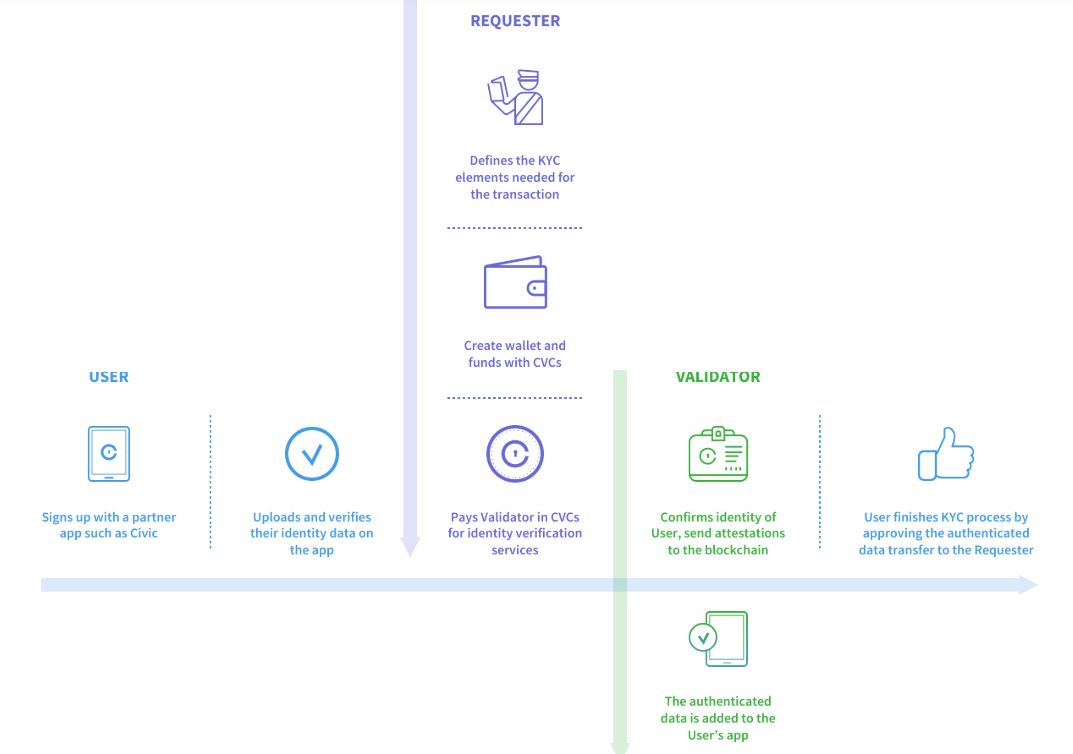

Civic has developed one such minimal disclosure model, focused on utilizing certificate authorities to create a “re-usable KYC.” Civic has created a system in which previously audited PII (personally identifying information) can be used to assure third parties of a person’s identity without the need to re-share the underlying PII. Using the example from above, with Civic, Alice only has to go through the KYC process once, and then the entity that verified her KYC (this step unfortunately still requires standard forms of ID) can provide attestations that Alice’s PII meets certain criteria. More specifically, the verifying entity can provide an attestation to the car rental company that Alice is above the minimum age required to rent a car without revealing any additional information about Alice. The Civic token (CVC) is used to incentivize third-party validators to provide attestations and can also be used to purchase “identity-related products” such as secure login / registration, multi-factor authentication, etc.
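To make the idea of an attestation concrete, here is a minimal sketch of the general pattern, not Civic’s actual protocol. The function names, the claim format, and the key are all hypothetical, and an HMAC tag stands in for a real public-key signature only because Python’s standard library does not include one. In a real system the car rental company would check the signature with the verifying entity’s public key and would never hold a secret.

```python
import hashlib
import hmac
import json
from datetime import date

# Hypothetical key held by the verifying entity. HMAC is a stand-in for a
# real digital signature; this is an illustration, not a secure design.
ATTESTER_KEY = b"demo-only-secret"


def age_on(birthdate: date, today: date) -> int:
    """Whole years elapsed between birthdate and today."""
    had_birthday = (today.month, today.day) >= (birthdate.month, birthdate.day)
    return today.year - birthdate.year - (0 if had_birthday else 1)


def attest_min_age(subject_id: str, birthdate: date, min_age: int, today: date) -> dict:
    """Issue a claim that reveals only whether the subject meets min_age."""
    claim = {
        "subject": subject_id,
        "claim": f"age_at_least_{min_age}",
        "value": age_on(birthdate, today) >= min_age,
        "issued": today.isoformat(),
    }
    payload = json.dumps(claim, sort_keys=True).encode()
    tag = hmac.new(ATTESTER_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "tag": tag}


def verify_attestation(attestation: dict) -> bool:
    """Accept only an untampered claim whose value is True."""
    payload = json.dumps(attestation["claim"], sort_keys=True).encode()
    expected = hmac.new(ATTESTER_KEY, payload, hashlib.sha256).hexdigest()
    untampered = hmac.compare_digest(expected, attestation["tag"])
    return untampered and attestation["claim"]["value"] is True


# The verifying entity has seen Alice's birthdate; the rental company has not.
attestation = attest_min_age("alice", date(1990, 5, 17), 25, date(2019, 3, 1))
rental_allowed = verify_attestation(attestation)
```

The point of the sketch is what the attestation leaves out: the rental company receives a yes/no answer and a tag it can check, while Alice’s birthdate, address, and license number never leave the verifying entity.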

Just as sharing the same sensitive data with multiple parties creates vulnerabilities, using the same user ID and password across multiple platforms is not only bad policy from a security standpoint but can lead to association and tracking of accounts. With this in mind, Microsoft has designed its own minimal disclosure model targeted at authentication.

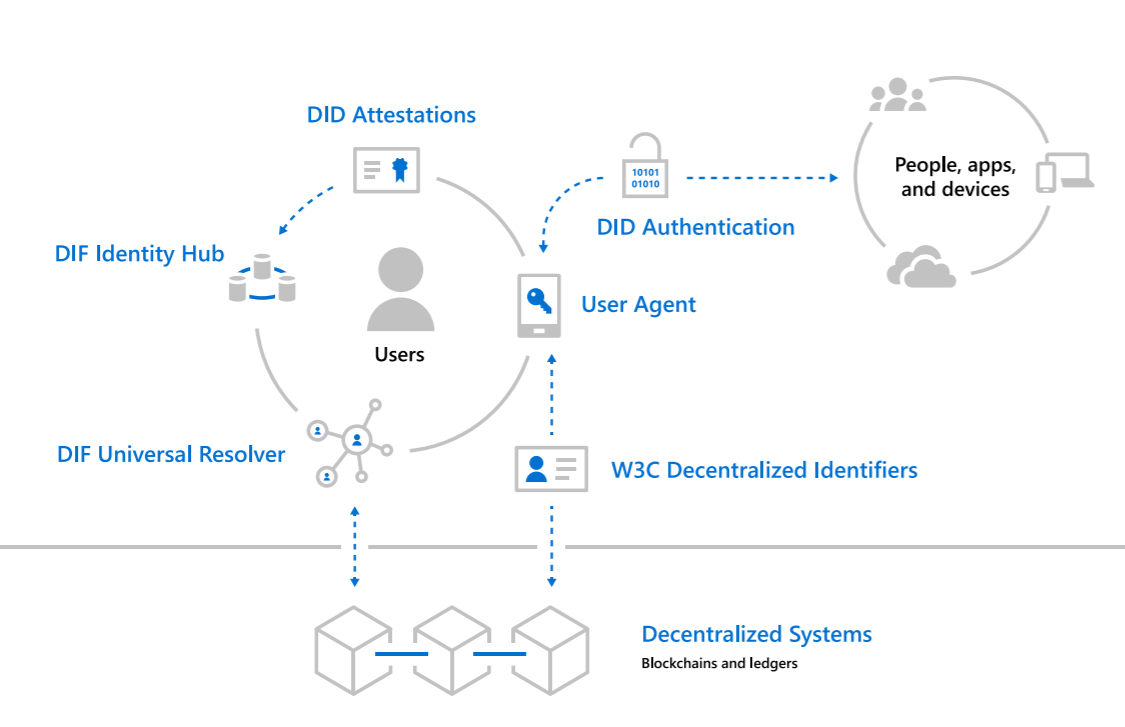

Microsoft has designed an open-source, interoperable Layer 2 DID (decentralized identifier) implementation in which a user creates a DID that is then linked to non-PII data. A user’s actual identity data (PII) resides encrypted off-chain and under the control of the user. DIDs are user-generated and not limited to one per account, since the idea is to avoid having one set of log-in credentials that can be traced and tracked across multiple platforms or service providers. DIDs can either be public (when you want an interaction linked to you in a way that can be verified by others) or pairwise (when privacy is important and interactions therefore need to be isolated and correlation prevented).

To walk through a concrete example, let’s say that Alice wants to authenticate with an external party. Alice would disclose a DID to that party, and that party would look up the disclosed DID through the Universal Resolver, which would then return the matching non-PII metadata corresponding to that DID. The external party then creates a “challenge” using the public key references in the metadata and performs a “handshake” with Alice, proving that Alice is the owner of the DID. To prevent creation of “false identities,” attestations may be required initially until a level of credibility is established through multiple attestations or endorsements. Organizations requesting authentication can require multiple attestations for higher-stakes interactions.

Coinbase is also investing in identity, running a dedicated team on the topic and recently acquiring Distributed Systems, a company specializing in decentralized identity. Minimal disclosure appears to be among the company’s priorities, in addition to other aspects of identity: it has highlighted how decentralized identity could let a user prove that they have a relationship with the Social Security Administration without producing an actual copy of their SSN. While the Social Security Administration and the DMV are currently the strongest purveyors of identity in the U.S., as the world becomes increasingly digital, Coinbase believes this model may ultimately extend to social media posts, photos, and other components of one’s digital identity.

Monetizable Data Ownership

While minimal disclosure models focus mainly on protecting personal identifiers (SSN, DOB, and other PII), the non-PII data points that comprise a user’s online identity also need to be protected. If users could own their own data, and control access to it, the value of this data would theoretically accrue to its owner rather than to the platforms that currently collect it (Google, Facebook, Amazon). Governments have acknowledged that “data has value, and it belongs to you,” with California Governor Gavin Newsom proposing a “digital dividend” that allows consumers to share in the profits of tech companies that have been “collecting, curating and monetizing” their users’ personal data⁶. However, this approach doesn’t allow for commensurate compensation and amounts to little more than a tax on Big Tech companies, evenly distributed to individuals. Instead, blockchain technology allows for a more dynamic system in which users control their own data and can directly monetize access to that data, commensurate with the level of access they choose to provide.

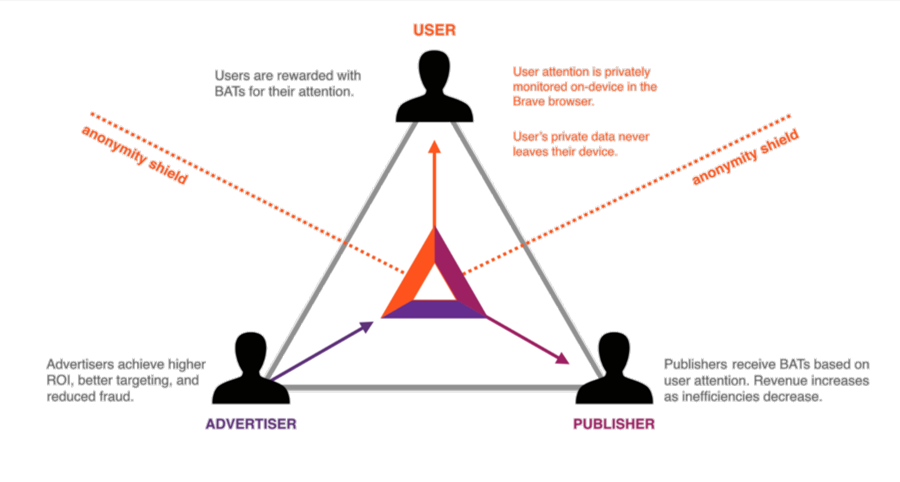

There are several blockchain companies, in various stages of usage and development, that aim to create marketplaces for users to monetize their own data. BAT is an example of one such platform. BAT stands for Basic Attention Token and is an ERC-20 token that serves as a utility token traded between advertisers, publishers, and users within a blockchain-based digital advertising platform. In the system, advertisers grant publishers BATs based on the measured attention of users. Users also receive BATs for participating and they can choose to donate them back to publishers or use them within the platform. In the future, advertisers could participate in a system where a user receives one BAT in exchange for being served one ad. Zinc is another blockchain-based platform with a similar goal. Likewise, Vetri is a blockchain-based data marketplace through which users can sell anonymized data to marketers in exchange for VLD tokens that can be used to purchase gift cards within the platform.

Other examples of such marketplaces include Fysical and Steemit. Fysical is creating a platform for the exchange of location data and currently publishes over 15 billion data points from more than 10,000,000 mobile devices every month, according to the company. Steemit is a platform that allows users to monetize Reddit-style, user-generated content by earning tokens when their contributions get upvoted.

Data Exchange

Unintended access to data can also occur during data exchange, even when that data is anonymized. This is problematic since data exchange is necessary for continued innovation. Sharing medical and genomic data across medical institutions could accelerate the discovery of new treatments, conducting data analytics across financial institutions could avert financial crises, and sharing of driving data is likely imperative to the development of autonomous vehicles⁷. While blockchain technology can facilitate the exchange of data between untrusting parties, this data exchange still remains susceptible to privacy issues. Luckily, there are several blockchain companies focused on building privacy-focused networks from the ground up so that data can be exchanged in a way that prevents unintended access to underlying identifiers.

Oasis Labs is one such company. Oasis Labs is taking a full-stack approach to privacy, utilizing trusted execution environments (secure enclaves), secure multi-party computation, zero-knowledge proofs, and differential privacy. This limits the parties that have access to data at the protocol layer and limits data leakage of anonymized data at the application layer. Enigma is another project focused on creating a scalable privacy protocol, utilizing similar privacy techniques.
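Of the techniques listed, differential privacy is the easiest to illustrate briefly. The sketch below shows the standard Laplace mechanism applied to a counting query. It is a generic textbook example, not Oasis Labs’ or Enigma’s code, and the function names, the sample records, and the choice of epsilon are assumptions made for illustration.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Noisy count of matching records.

    Adding or removing one record changes a count by at most 1, so the
    sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical data set: ages of 1,000 patients shared between institutions.
rng = random.Random(7)
ages = [rng.randint(18, 90) for _ in range(1000)]
noisy_over_65 = private_count(ages, lambda age: age > 65, epsilon=0.5, rng=rng)
```

A smaller epsilon adds more noise and therefore gives stronger privacy at the cost of accuracy. The analyst still gets a useful aggregate, but the answer no longer reveals with confidence whether any one individual’s record is in the data set.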

Challenges

Since no blockchain article is complete without this list… and again, this is not a complete list. Check out The Impact of Digital Identity and Blockchain’s Battle with Financial Inclusion, by my friend and colleague Bosun Adebaki, for more challenges related to digital identity. All the usual blockchain limitations also apply.

Ownership Rights: While the idea of an individual controlling access to, and thereby monetizing, their own data sounds appealing, in practice it can be challenging. One issue with creating a marketplace for the exchange of individual data is that data property rights have yet to be defined. Once data is shared with a third party, it is very difficult to define ownership and to prevent a secondary market for that information from forming.

Valuation and Willingness to Pay: It will also be difficult to determine the value of different data or the willingness to pay for privacy initially, especially since users have been giving their data away, practically for free, for years. Furthermore, it is unclear if the $/month an individual might make from personal data monetization will be enough for the average consumer to offset the current user experience friction (key management, etc.) and uncertainties.

Recourse: Recourse in the case of abuses is also unclear in decentralized data exchange. In a recent video interview, Mark Zuckerberg spoke about researching ways to replace Facebook Connect, the social media giant’s single sign-on (SSO) application, with a more distributed system. However, he also posed some of the same questions raised above, asking “The question is, do you really want that? Do you have more cases where, yes, people would be able to not have an intermediary but there’d be more instances of abuse and recourse would be much harder?”

Still, in certain industries (autonomous driving, for example) the ability to securely exchange data is imperative to the viability of the business, and therefore the value proposition to enterprises is more likely to be high enough to offset any pain points in user experience and/or more active management.
